With the development of new information-interaction fields such as artificial intelligence, the Internet of Things (IoT) and intelligent medical care, computer systems based on the traditional von Neumann architecture find it increasingly difficult to meet the demands of data processing and of running complex neural network models. Neuromorphic computing is an information-processing model that emulates the efficiency, versatility, and flexibility of the human brain. Artificial synaptic devices have been used to construct artificial neural networks for neuromorphic computing, and various resistive and phase-change memories have been used to emulate synapses. However, in these memory technologies, synaptic strength is reconfigured primarily through software programming and by varying the pulse time, which may result in low efficiency and high energy consumption in neuromorphic computing. Conventional memories also face further challenges: excess write noise, write nonlinearity, and diffusion under zero bias.

Like synapses, charge-based energy storage devices can modulate and retain conductance under low-energy conditions. The unique characteristics of ion shuttling in energy storage devices have inspired a novel way to emulate information transmission across the synaptic gap. Experimental results have shown that a battery-like switching device can serve as an artificial synapse for low-energy computing at the power level of the brain. However, achieving selective and linear weight updates that satisfy the requirements of high-accuracy, self-adaptive neuromorphic computing remains challenging.
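To make the linearity requirement concrete, the following minimal sketch compares an ideal linear weight update with a saturating one. It uses a generic exponential-saturation model of conductance versus pulse number, which is an assumption for illustration and is not taken from the study; the function names, the nonlinearity parameter `nu`, and the normalized conductance window are all hypothetical.

```python
import math


def conductance_after_pulses(n, n_max=100, g_min=0.0, g_max=1.0, nu=0.0):
    """Normalized conductance after n identical potentiation pulses.

    Assumed phenomenological model (not from the study):
    nu = 0 gives a perfectly linear update; larger nu makes the
    conductance saturate earlier, i.e. the update is more nonlinear.
    """
    x = n / n_max
    if abs(nu) < 1e-9:
        frac = x  # linear limit of the expression below
    else:
        frac = (1.0 - math.exp(-nu * x)) / (1.0 - math.exp(-nu))
    return g_min + (g_max - g_min) * frac


def max_deviation_from_linear(nu, n_max=100):
    """Largest gap between the modeled curve and the ideal straight line."""
    return max(
        abs(conductance_after_pulses(n, n_max, nu=nu) - n / n_max)
        for n in range(n_max + 1)
    )


if __name__ == "__main__":
    for nu in (0.0, 1.0, 5.0):
        print(f"nu={nu}: max deviation = {max_deviation_from_linear(nu):.3f}")
```

In this toy model, a device with a small `nu` changes its weight by nearly the same amount on every pulse, which is the behavior a training algorithm implicitly expects when it applies a fixed update step.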

Writing in

National Science Review, WANG

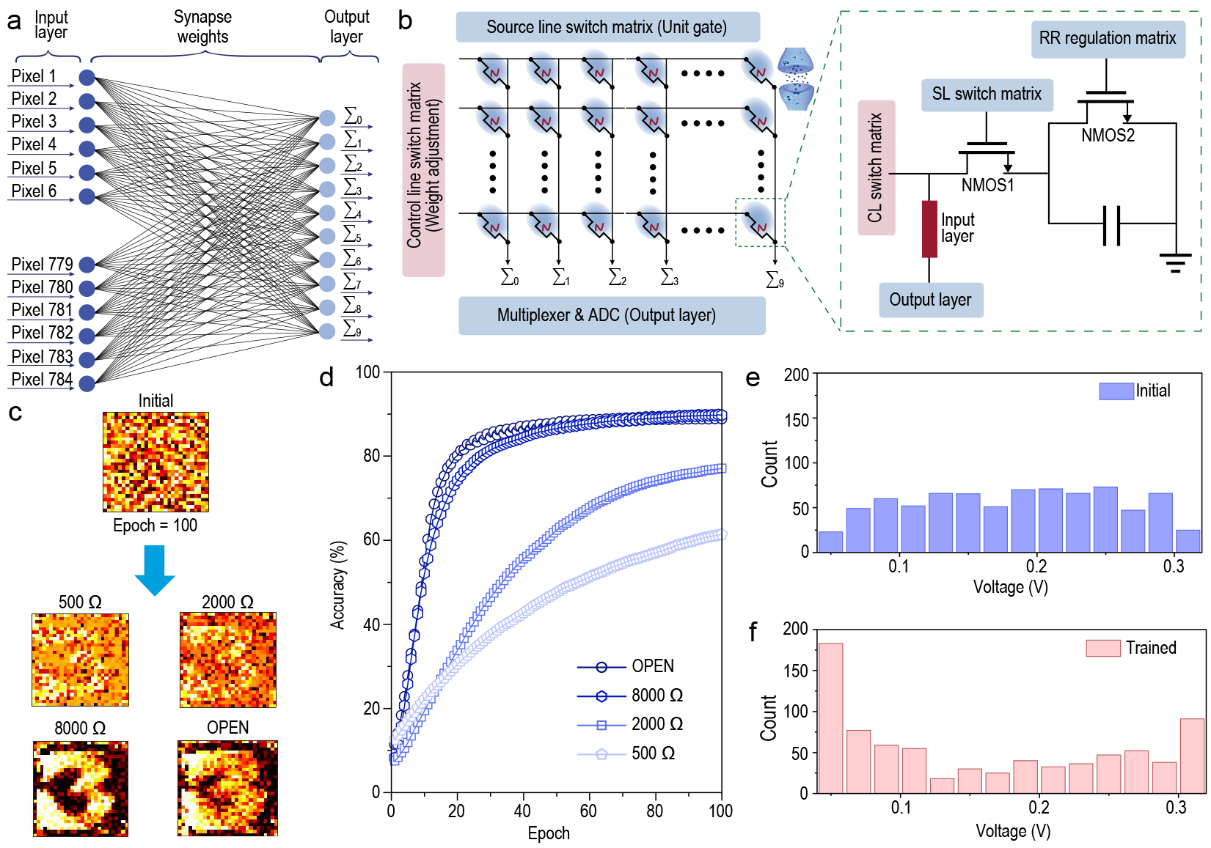

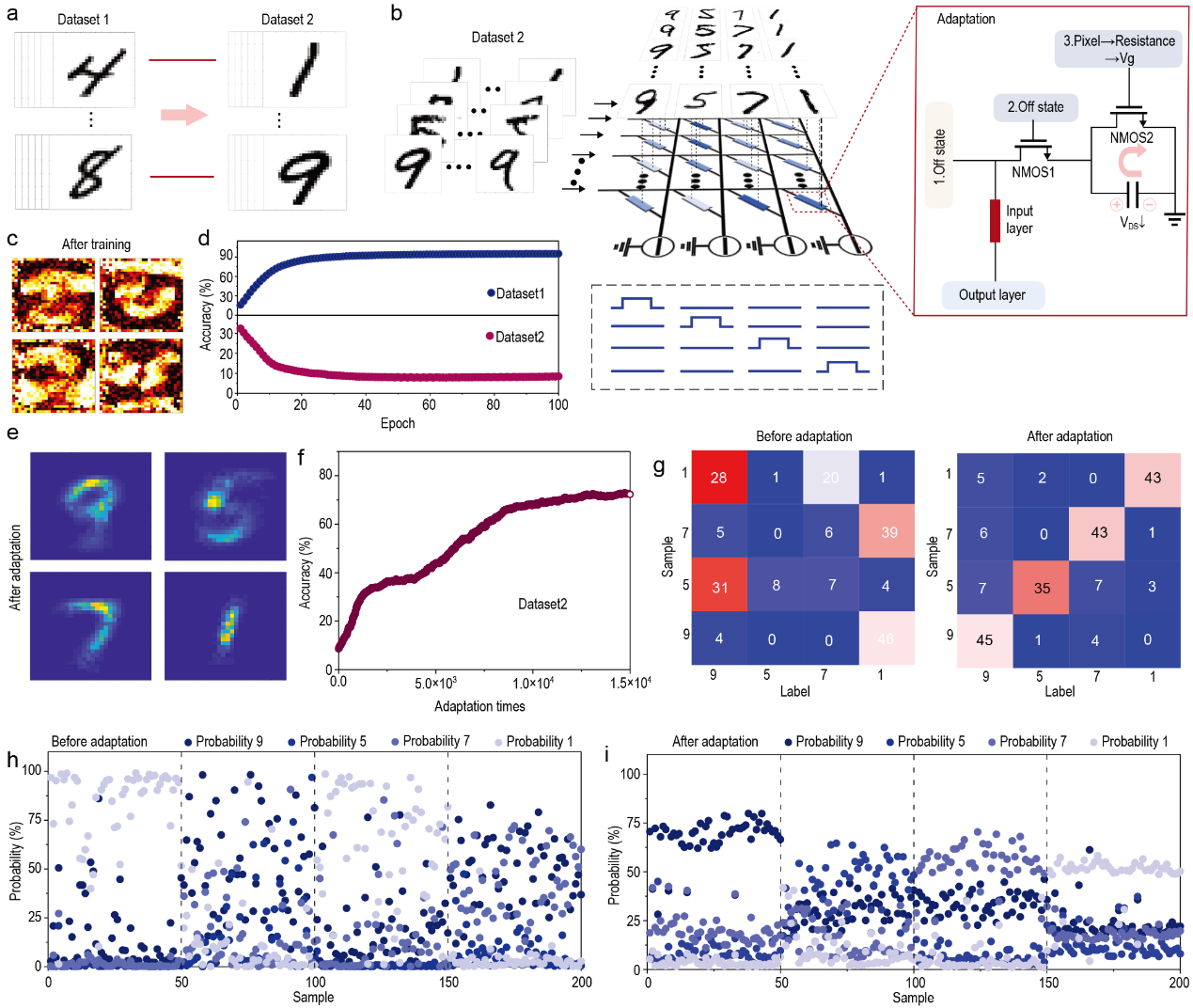

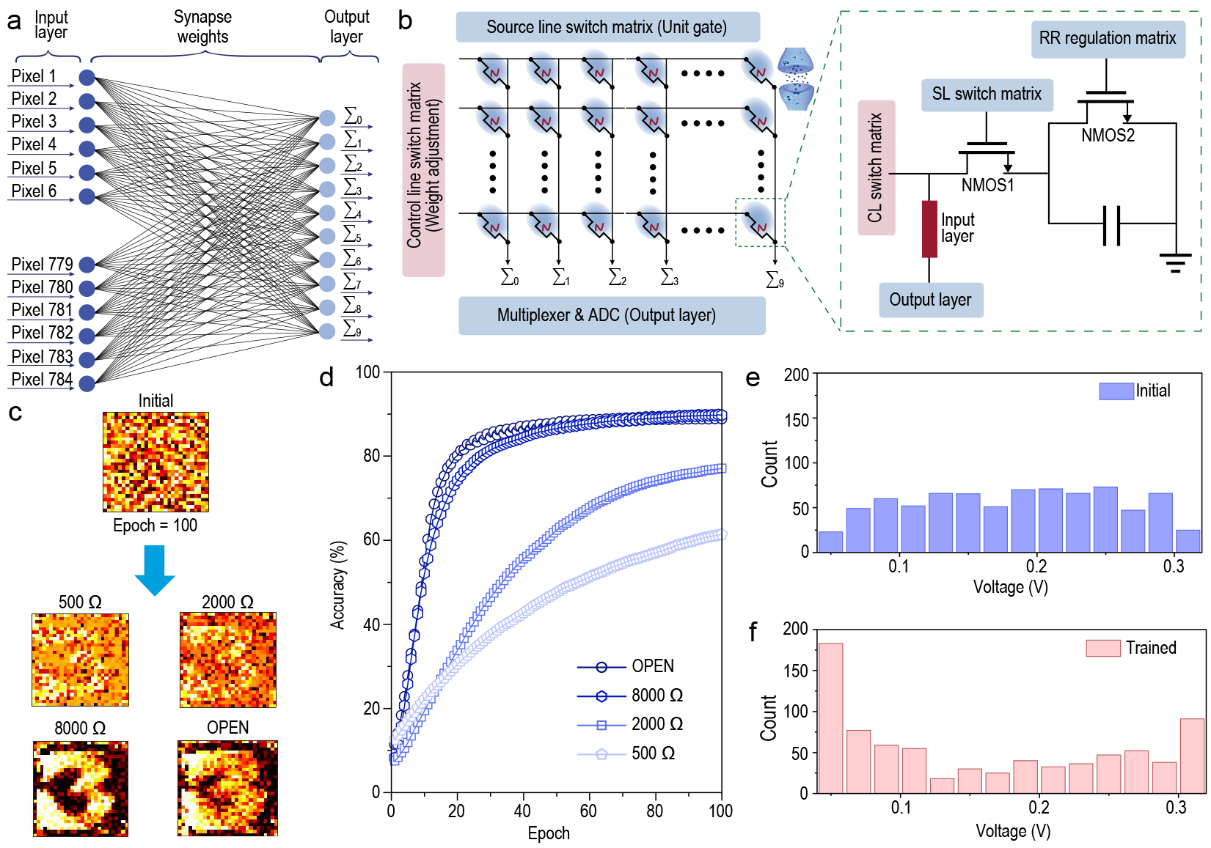

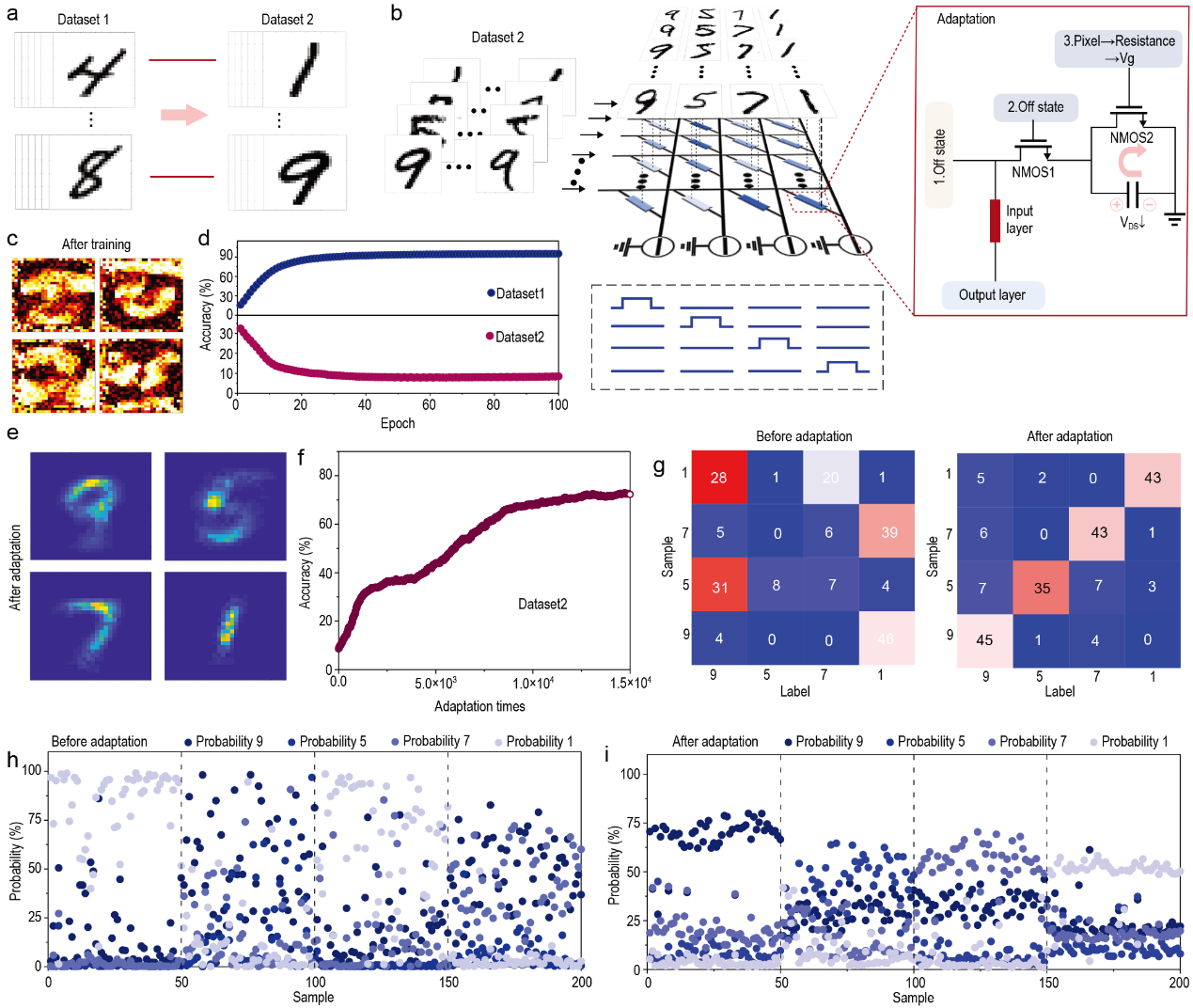

Lili from State Key Laboratory for Superlattices and Microstructures, Institute of Semiconductors, CAS, SHEN Guozhen from Beijing Institute of Technology and FAN Zhiyong from The Hong Kong University of Science and Technology proposed a novel hardware neural network based on a tunable flexible MXene energy storage (FMES) system (Figure 1). The synaptic weights, represented by w in machine learning, could be changed without varying the external stimulus. Coupling MXene and gel electrolyte could control the accumulation and dissipation of ions by tuning the resistance, which modulates w. Results of machine learning simulations prove that the synapse based on tunable FMES can be used in neuromorphic calculation tasks (e.g., number classification, and pattern recognition). For a dataset of handwritten patterns, by changing the resistance and seeking the best learning rate, the proposed system achieved a recognition accuracy of ~95% (Figure 2). Furthermore, the FMES device can be adaptively adjusted according to the stored weight value with an enhancement recognition accuracy about 80%. Therefore, the time and energy loss caused by recalculation was prevented (Figure 3). This study is crucial for simulating human memory features and artificial neural systems.
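As a rough illustration of how a classifier can be trained when its weights live in a bounded, device-like window, here is a minimal sketch. It is not the authors' simulation: it uses toy 3x3 bar patterns instead of handwritten digits, a single-layer perceptron instead of the tri-layer network, and assumed values for the weight window (`W_MIN`, `W_MAX`) and the per-update weight change (`STEP`). All names are hypothetical.

```python
import random

# All values below are illustrative assumptions, not device parameters from the study.
W_MIN, W_MAX = -1.0, 1.0  # assumed normalized weight (conductance) window
STEP = 0.05               # assumed weight change per update; acts like a learning rate


def make_sample(rng, label, noise=0.05):
    """Return a 3x3 binary image as a flat list of 9 pixels.

    label 0 is a vertical bar, label 1 is a horizontal bar; each pixel is
    flipped independently with probability `noise`.
    """
    on = {1, 4, 7} if label == 0 else {3, 4, 5}
    pixels = [1.0 if i in on else 0.0 for i in range(9)]
    return [1.0 - p if rng.random() < noise else p for p in pixels]


def make_dataset(n, noise, seed=0):
    """Build n (pixels, label) pairs with alternating labels."""
    rng = random.Random(seed)
    return [(make_sample(rng, i % 2, noise), i % 2) for i in range(n)]


def clip(w):
    """Keep a weight inside the assumed device window."""
    return min(W_MAX, max(W_MIN, w))


def predict(weights, bias, pixels):
    s = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if s > 0 else 0


def train(samples, epochs=20):
    """Perceptron rule with fixed-size, clipped weight updates."""
    weights, bias = [0.0] * 9, 0.0
    for _ in range(epochs):
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)  # -1, 0, or +1
            if error == 0:
                continue
            for i, p in enumerate(pixels):
                if p > 0:  # only weights attached to active pixels are updated
                    weights[i] = clip(weights[i] + error * STEP)
            bias = clip(bias + error * STEP)
    return weights, bias


def accuracy(weights, bias, samples):
    hits = sum(predict(weights, bias, px) == lb for px, lb in samples)
    return hits / len(samples)


if __name__ == "__main__":
    train_set = make_dataset(200, noise=0.05, seed=0)
    test_set = make_dataset(200, noise=0.05, seed=1)
    w, b = train(train_set)
    print(f"test accuracy: {accuracy(w, b, test_set):.2f}")
```

Narrowing the weight window or enlarging `STEP` in this toy model is one simple way to explore how a limited conductance range and coarse updates might affect recognition accuracy.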

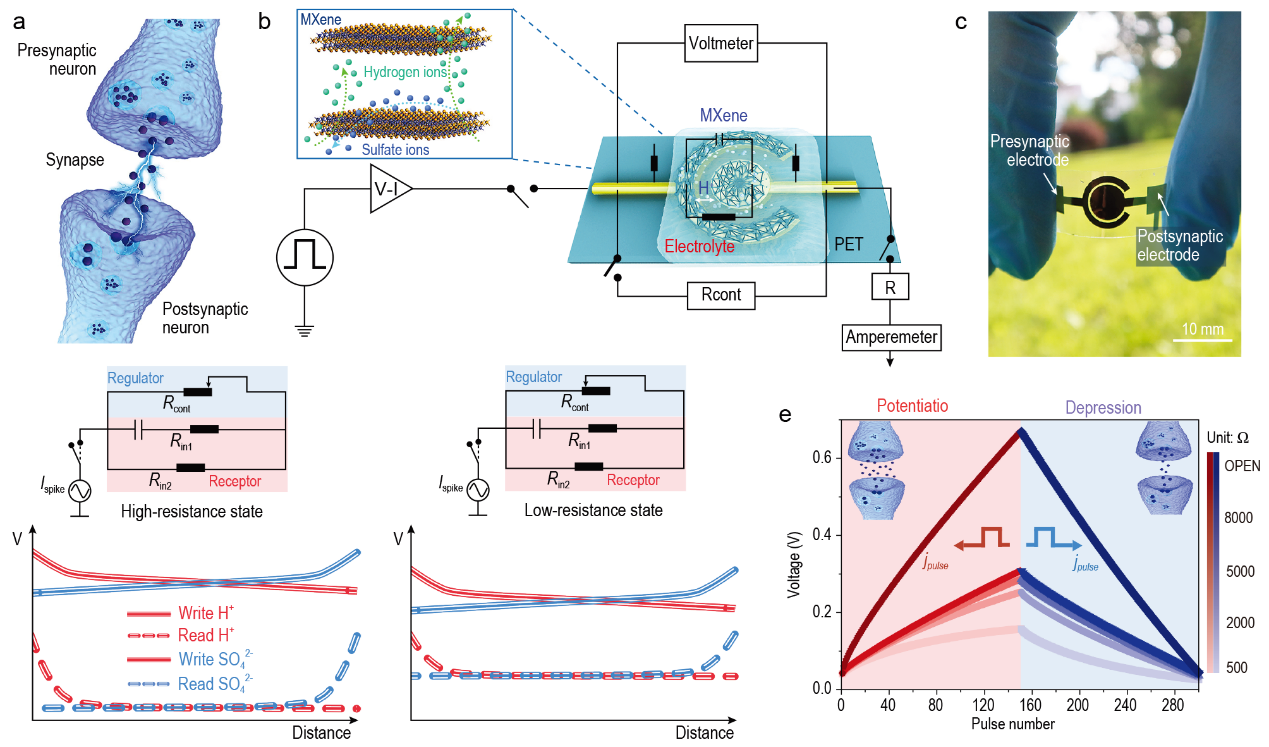

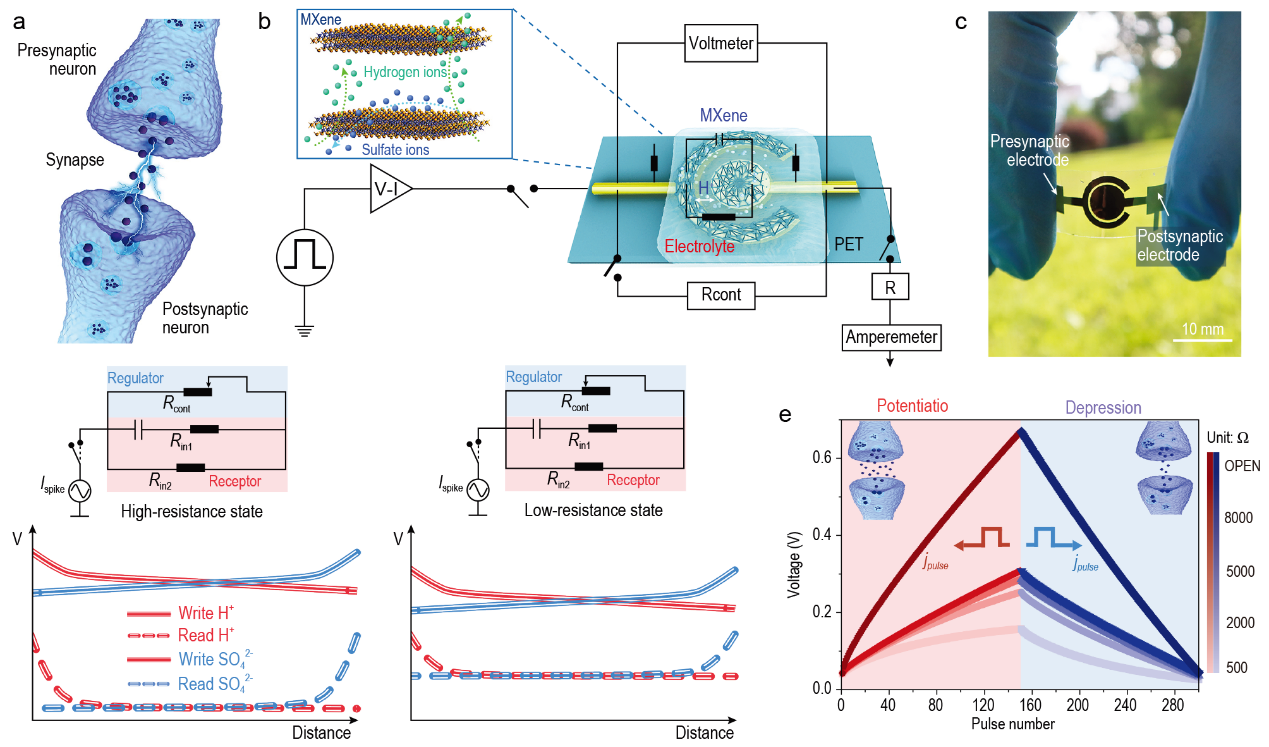

Figure 1. Structure and electronic states of the FMES device. (a) Structural and functional illustration of a biological synapse. (b) Illustration of the FMES device. The inset shows the dynamic diffusion process. (c) Optical image of the FMES device. (d) Schematic and corresponding circuit diagram explaining the decoupling of the read and write operations. (e) Postsynaptic current monitored under several sequential voltage pulses.

Figure 2. Accuracy of neuromorphic computation. (a) Tri-layer neural network structure. (b) Hardware neural network comprising FMES devices. (c) Mapping of the 784 synaptic weights connected to the output digit ‘3’ for a representative input digit, at the initial state and at various resistance states during training. (d) Comparison of the classification accuracy at the initial state and after 100 training cycles. Distribution of the PSV of the FMES devices before (e) and after (f) training.

Figure 3. Adaptive handwritten digit recognition simulation. (a) Dataset1 and dataset2 extracted from MNIST. (b) Weight mapping images of the ANN. (c) Recognition accuracy for dataset1 (top) and dataset2 (bottom). (d) Illustration of the adaptive adjustment of the ANN weights. (e) Weight mapping images of the ANN after adaptation. (f) Relationship between the recognition accuracy of the ANN on dataset2 and the number of mapped images. (g) Classification and recognition of 200 samples by the ANN before and after adaptation. Probability that each of the 200 samples belongs to each of the four possible outcomes before (h) and after (i) adaptation.